On Thursday, OpenAI rocked the world of artificial intelligence again with a video generation model called Sora.

The demos, generated from simple text prompts, show photorealistic footage with crisp detail and complexity. A video made from the prompt "Reflections in the window of a train traveling through the Tokyo suburbs" looks like it was shot on a phone, complete with shaky camerawork and reflections of the train's passengers. There are no weird, mangled hands in sight.


A video from the prompt "A movie trailer featuring the adventures of the 30 year old space man wearing a red wool knitted motorcycle helmet, blue sky, salt desert, cinematic style, shot on 35mm film, vivid colors" looks like the lovechild of Christopher Nolan and Wes Anderson.


Another, showing golden retriever puppies playing in the snow, features soft fur and fluffy snow so realistic you could almost reach out and touch it.

The $7 trillion question is how OpenAI pulled this off. We don't actually know, because OpenAI has shared almost nothing about its training data. But building a model this advanced requires an enormous amount of video data, so it's reasonable to assume Sora was trained on video scraped from all corners of the web. Some have speculated that the training data includes copyrighted works. OpenAI did not immediately respond to a request for comment on Sora's training data.


OpenAI's technical paper focuses on how it achieved these results: Sora is a diffusion model that turns visual data into "patches," small units of data the model can process. But the paper says almost nothing about where that visual data came from.

OpenAI says it "take[s] inspiration from large language models which acquire generalist capabilities by training on internet-scale data." That extremely vague "takes inspiration" line is the only allusion to the source of Sora's training material. OpenAI further states in the paper that "training text-to-video generation systems requires a large amount of videos with corresponding text captions." The only place to find visual data in those quantities is the web, another hint about where Sora came from.

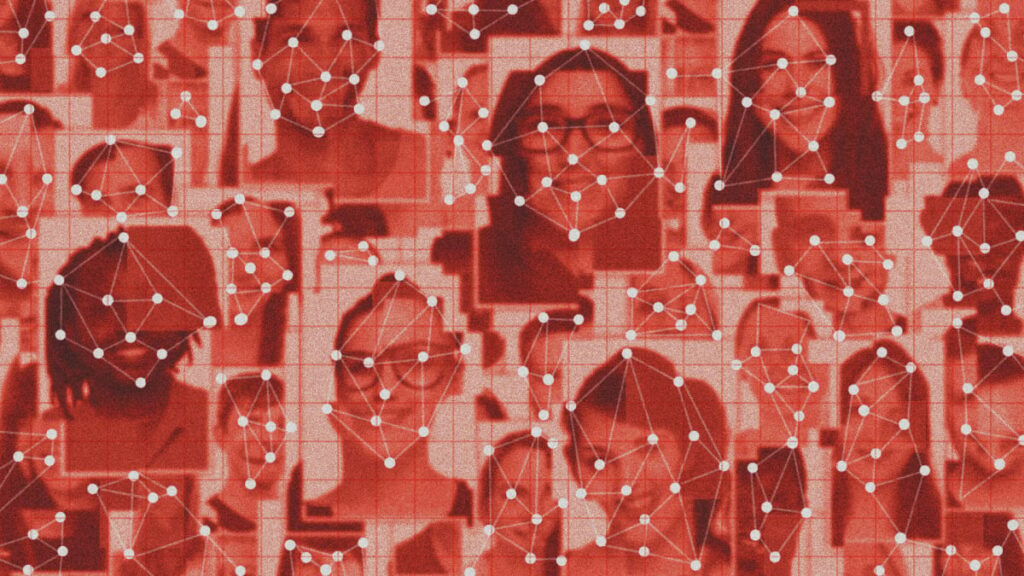

Legal and ethical questions about how AI training data is obtained have followed OpenAI ever since it launched ChatGPT. Both OpenAI and Google have been accused of "stealing" data to train their language models, that is, using data scraped from social media, online forums like Reddit and Quora, Wikipedia, private book repositories, and news websites.

Until now, the justification for pulling training material from all over the internet has been that it was publicly available. But publicly available doesn't always mean public domain. The New York Times, for example, is suing OpenAI and Microsoft, accusing OpenAI of copyright infringement for reproducing its stories word for word or misquoting them.

Now it looks like OpenAI may be doing the same thing, but for video. If so, entertainment industry heavyweights will surely have something to say about it.

But the problem remains: we still don't know the source of Sora's training material. "The company (despite its name) has been tight-lipped about what they train their models on," wrote AI expert Gary Marcus, who testified at a U.S. Senate hearing on AI oversight. "Many people have [speculated] that there's probably a lot of material generated by game engines like Unreal. I wouldn't be surprised if there was also a lot of training on YouTube and on all kinds of copyrighted material," Marcus said, before adding, "Artists could really get screwed here."

While OpenAI keeps its secrets, artists and creatives are fearing the worst. Justine Bateman, a filmmaker and generative AI advisor to SAG-AFTRA, put it bluntly. "Every nanosecond of this #AI garbage is trained on stolen work by real artists," Bateman posted on X. "Disgusting," she added.


Others in the creative industries are worried about how the rise of Sora and other generative video models will affect their work. "I work in visual effects on movies and almost everyone I know is frustrated and panicking about what to do now," posted @JimmyLansworth.

OpenAI hasn't completely ignored Sora's potentially explosive impact, but its attention has mainly been on potential harms involving deepfakes and misinformation. Sora is currently in the red-teaming phase, which means it is being stress-tested for inappropriate and harmful content. Toward the end of its announcement, OpenAI said it would "engage policymakers, educators, and artists around the world to understand their concerns and identify positive use cases for this new technology."

But that doesn't address any harm that may already have been done in building Sora in the first place.
