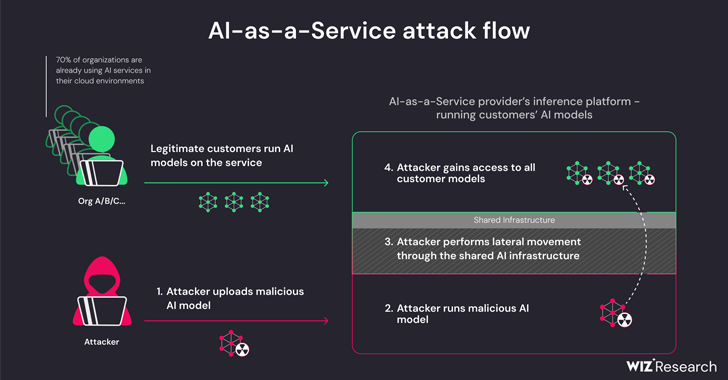

New research finds that artificial intelligence (AI)-as-a-service providers such as Hugging Face are susceptible to two critical risks that could allow threat actors to escalate privileges, gain cross-tenant access to other customers' models, and even take over continuous integration and continuous deployment (CI/CD) pipelines.

“Malicious models pose a significant risk to AI systems, especially AI-as-a-service providers, as potential attackers may exploit these models to conduct cross-tenant attacks,” said Wiz researchers Shir Tamari and Sagi Tzadik.

“The potential impact is devastating, as attackers may be able to access millions of private AI models and applications stored within AI-as-a-service providers.”

This development comes as machine learning pipelines emerge as a new supply chain attack vector, and repositories like Hugging Face become attractive targets for adversarial attacks aimed at gathering sensitive information and accessing target environments.

The two-pronged threats stem from a takeover of the shared inference infrastructure and a takeover of the shared CI/CD. They make it possible to run untrusted models uploaded to the service in pickle format and to take over the CI/CD pipeline to carry out a supply chain attack.

The cloud security company's findings show that an attacker can upload a malicious model to breach the service that runs custom models, then use container escape techniques to break out of their own tenant and compromise the entire service. This effectively gives threat actors cross-tenant access to other customers' models stored and run on Hugging Face.

“Hugging Face will still let users run inference on uploaded Pickle-based models on the platform's infrastructure, even when they are deemed dangerous,” the researchers explained.

This essentially allows an attacker to craft a PyTorch (Pickle) model that executes arbitrary code when it is loaded, and chain it with a misconfiguration in Amazon Elastic Kubernetes Service (EKS) to gain elevated privileges and move laterally within the cluster.
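To illustrate why loading a Pickle-based model amounts to running code, here is a minimal, harmless sketch using only Python's standard library. `MaliciousModel`, `SAFE_GLOBALS`, and `restricted_loads` are hypothetical names, and the "payload" merely calls `print`. The defensive half follows the "Restricting Globals" pattern from the Python `pickle` documentation; it is not how Hugging Face or Wiz addressed the issue.

```python
import io
import pickle


class MaliciousModel:
    """Hypothetical stand-in for a booby-trapped checkpoint (harmless payload)."""

    def __reduce__(self):
        # pickle records "call print(...)" instead of the object's state,
        # so the call runs as soon as the bytes are deserialized.
        return (print, ("payload executed at load time",))


# Illustrative allowlist of globals the restricted loader will resolve.
# PyTorch-style state dicts are OrderedDicts, so that is the only entry here.
SAFE_GLOBALS = {("collections", "OrderedDict")}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Every class or function referenced by the stream is resolved here.
        if (module, name) in SAFE_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Deserialize data, refusing any global not on the allowlist."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


if __name__ == "__main__":
    blob = pickle.dumps(MaliciousModel())
    pickle.loads(blob)  # prints the payload message: code ran during loading
    try:
        restricted_loads(blob)
    except pickle.UnpicklingError as exc:
        print("blocked:", exc)
```

Allowlisting in `find_class` narrows what a pickle can call, but it is easy to get wrong. PyTorch's `torch.load(..., weights_only=True)` applies a similar restriction, and tensor-only formats such as safetensors sidestep code execution on load entirely.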

“If the secrets we obtained had fallen into the hands of malicious actors, it could have had a significant impact on the platform,” the researchers said. “Secrets in shared environments can often lead to cross-tenant access and exposure of sensitive data.”

To mitigate this issue, it is recommended to enable IMDSv2 with a hop limit to prevent pods from accessing the Instance Metadata Service (IMDS) and obtaining the role of a node within the cluster.
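The recommended setting can be sketched with the AWS SDK for Python (boto3), whose EC2 client exposes `modify_instance_metadata_options`. The helper names `imdsv2_metadata_options` and `harden_instance` are hypothetical, and a hop limit of 1 is an assumption drawn from general AWS guidance for container hosts, not a value stated in the research. With IMDSv2 required, a caller must first fetch a session token with a PUT request; a hop limit of 1 keeps that response from crossing the extra network hop into a pod's own network namespace.

```python
from typing import Any, Dict, Optional


def imdsv2_metadata_options(hop_limit: int = 1) -> Dict[str, Any]:
    """Build EC2 metadata options that require IMDSv2 with a small hop limit."""
    # EC2 accepts hop limits from 1 to 64.
    if not 1 <= hop_limit <= 64:
        raise ValueError("hop_limit must be between 1 and 64")
    return {
        "HttpTokens": "required",              # IMDSv2 only: token-less requests are rejected
        "HttpPutResponseHopLimit": hop_limit,  # how far the token response may travel
        "HttpEndpoint": "enabled",             # keep IMDS available to the node itself
    }


def harden_instance(instance_id: str, hop_limit: int = 1,
                    region_name: Optional[str] = None) -> Dict[str, Any]:
    """Apply the options to one running instance (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helper above works without boto3 installed

    ec2 = boto3.client("ec2", region_name=region_name)
    return ec2.modify_instance_metadata_options(
        InstanceId=instance_id, **imdsv2_metadata_options(hop_limit)
    )
```

For EKS managed node groups, the same metadata options are usually set in the node group's launch template rather than per instance. Pods running with `hostNetwork: true` share the node's network namespace, so the hop limit does not shield IMDS from them.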

The research also found that, when running an application on the Hugging Face Spaces service, it is possible to achieve remote code execution through a specially crafted Dockerfile and use it to pull and push (i.e., overwrite) all the images available on an internal container registry.

Following coordinated disclosure, Hugging Face said it has addressed all the identified issues. It also urges users to use models only from trusted sources, enable multi-factor authentication (MFA), and avoid using pickle files in production environments.
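One way to act on that advice before a file is ever loaded is to list the globals a pickle references, since anything it imports can be called during deserialization. The sketch below walks the opcode stream with the standard library's `pickletools.genops` without executing it. `referenced_globals`, `unexpected_globals`, and the allowlist are hypothetical, and the memo tracking is a simplification, so treat this as a best-effort pre-check rather than a guarantee of safety.

```python
import pickletools
from typing import Dict, List, Optional, Set, Tuple

# Opcodes whose argument is a string pushed onto the unpickling stack.
STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE",
              "STRING", "BINSTRING", "SHORT_BINSTRING"}
MEMO_GET_OPS = {"GET", "BINGET", "LONG_BINGET"}
MEMO_PUT_OPS = {"PUT", "BINPUT", "LONG_BINPUT"}

# Illustrative allowlist: a plain PyTorch-style state dict needs little more.
ALLOWED_GLOBALS = {("collections", "OrderedDict")}


def referenced_globals(data: bytes) -> List[Tuple[str, str]]:
    """Best-effort list of (module, name) pairs a pickle would import."""
    found: List[Tuple[str, str]] = []
    strings: List[str] = []              # strings pushed so far, in order
    memo: Dict[int, Optional[str]] = {}  # memo slot -> string (None if not a string)
    last: Optional[str] = None           # top of stack, if it is a known string
    for opcode, arg, _pos in pickletools.genops(data):
        name = opcode.name
        if name in STRING_OPS:
            last = arg
            strings.append(arg)
        elif name in MEMO_GET_OPS:       # a memoized value is pushed again
            last = memo.get(arg)
            if last is not None:
                strings.append(last)
        elif name == "MEMOIZE":          # protocol 4+: store top of stack in next slot
            memo[len(memo)] = last
        elif name in MEMO_PUT_OPS:       # protocols 0-3: store top of stack in slot arg
            memo[arg] = last
        elif name == "GLOBAL":           # protocols 0-3: arg is "module name"
            module, attr = arg.split(" ", 1)
            found.append((module, attr))
            last = None
        elif name == "STACK_GLOBAL":     # protocol 4+: module and name were pushed
            if len(strings) >= 2:
                found.append((strings[-2], strings[-1]))
            last = None
        else:                            # any other opcode leaves a non-string on top
            last = None
    return found


def unexpected_globals(data: bytes,
                       allowed: Set[Tuple[str, str]] = ALLOWED_GLOBALS
                       ) -> List[Tuple[str, str]]:
    """Globals referenced by the pickle that are not on the allowlist."""
    return [g for g in referenced_globals(data) if g not in allowed]
```

A non-empty result from `unexpected_globals` is a reason to reject the file; an empty one only means this scanner found nothing, not that loading is safe.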

“This research demonstrates that using untrusted AI models, especially Pickle-based ones, could result in serious security consequences,” the researchers said. “Furthermore, if you intend to let users run untrusted AI models in your environment, it is extremely important to ensure that they run in a sandboxed environment.”

Separate research from Lasso Security has found that generative AI models such as OpenAI ChatGPT and Google Gemini could be used to distribute malicious (and non-existent) code packages to unsuspecting software developers.

In other words, the idea is to find recommendations for unpublished packages and publish trojanized packages in their place to spread malware. The phenomenon of AI package hallucination underscores the need for caution when relying on large language models (LLMs) for coding solutions.
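One defensive habit this suggests is treating any package an LLM recommends as unvetted until a person has reviewed it, rather than installing it simply because the name resolves on a registry (an attacker may have registered exactly that hallucinated name). A minimal sketch, assuming a team keeps its own list of approved dependencies; `unvetted` and the approved list are hypothetical, while the name normalization follows PEP 503.

```python
import re
from typing import Iterable, List, Set


def normalize(name: str) -> str:
    """PEP 503 normalization: runs of '-', '_' or '.' become '-', then lowercase."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted(suggested: Iterable[str], approved: Iterable[str]) -> List[str]:
    """Return suggested package names that are not on the approved list."""
    approved_norm: Set[str] = {normalize(n) for n in approved}
    return [n for n in suggested if normalize(n) not in approved_norm]
```

Normalizing first means `Requests` and `requests`, or `foo_bar` and `foo-bar`, are treated as the same project, so look-alike spellings cannot slip past an exact string comparison.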

Artificial intelligence company Anthropic has also detailed a new method called “many-shot jailbreaking” that can be used to bypass the safety protections built into LLMs by exploiting the model's context window to elicit responses to potentially harmful queries.

“The ability to enter more and more information has clear advantages for LLM users, but it also brings risks: jailbreaking vulnerabilities that exploit longer context windows,” the company said earlier this week.

In a nutshell, the technique involves introducing a large number of faux dialogues between a human and an AI assistant within a single prompt for the LLM in an attempt to “steer model behavior” and get it to respond to queries it would otherwise refuse (e.g., “How do I build a bomb?”).