Game-playing AI models date back decades, but they typically focus on one game and always play to win. The latest creation from Google DeepMind researchers has a different goal: a model that can play multiple 3D games like a human, but also does its best to understand and act on your verbal instructions.

Of course, “AI” or computer characters have done this kind of thing before, but they are more like features of a game: NPCs that you can control indirectly using formal in-game commands.

DeepMind’s SIMA (Scalable Instructable Multiworld Agent) does not have any access to the game’s internal code or rules; instead, it is trained on many hours of video of human gameplay. From this data, and the annotations provided by data labelers, the model learns to associate certain visual representations with actions, objects, and interactions. The researchers also recorded videos of players instructing one another to do things in the game.

For example, it might learn from how the pixels on screen move in a certain pattern that this is an action called “moving forward,” or that when a character approaches a door-like object and uses the doorknob-looking object, that is “opening” a “door.” Simple things like this: tasks or events that take a few seconds, but are more than just pressing a key or recognizing something.

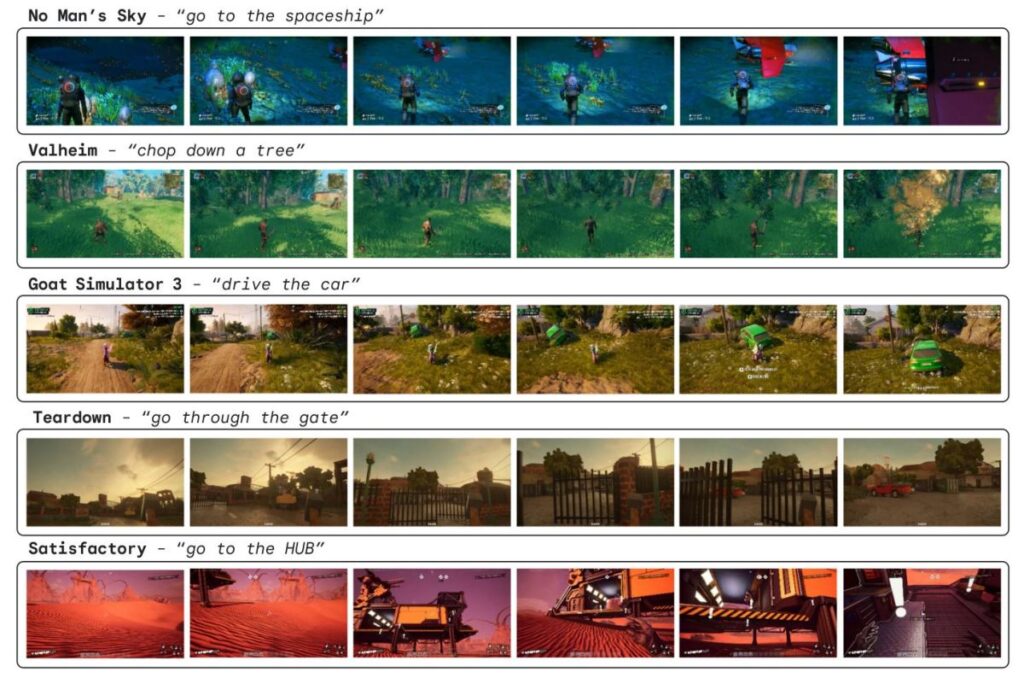

The training videos were recorded in games such as Valheim and Goat Simulator 3, whose developers were involved in the project and consented to this use of their software. One of the main goals is to see whether training an AI to play one set of games enables it to play other games it has never seen, a process called generalization, the researchers said in a call with the press.

The answer is yes, but there are some caveats. AI agents trained on multiple games performed better on games they had no exposure to. Of course, many games involve specific and unique mechanics or terminology that would hinder even the best-prepared AI. But there’s nothing stopping the model from learning these things, other than a lack of training material.

This is partly because, while there is a lot of jargon in games, there are only so many “verbs” players have that actually affect the game world. Whether you’re assembling a lean-to, pitching a tent, or conjuring a magical sanctuary, you’re really “building a house,” right? So this map of the dozens of primitives the agent currently recognizes makes for very interesting reading:

SIMA recognizes dozens of primitive actions and can execute or combine them.

In addition to fundamentally advancing the field of agent-based artificial intelligence, the researchers aim to create a more natural gaming partner than the rigid, hard-coded ones we have today.

“Instead of fighting against superhuman agents, you can have SIMA players next to you, and they can cooperate and you can give them instructions,” said Tim Harley, one of the project’s leaders.

Because all they see when they play is the pixels of the game screen, they have to learn how to do things in much the same way we do, but that also means they can adapt and produce emergent behaviors, too.

You might be wondering how this compares with a common approach to building agent-type AI, the simulator approach, in which a mostly unsupervised model experiments wildly in a 3D simulated world running far faster than real time. That lets it learn the rules intuitively and design behaviors around them without nearly as much annotation work.

“Traditional simulator-based agent training uses reinforcement learning, which requires a game or environment to provide a ‘reward’ signal for the agent to learn from, such as winning or losing in Go or StarCraft, or a ‘score,’” Harley told TechCrunch, noting that this method was used for those games and produced amazing results.

“In the games we use, such as our partners’ commercial games,” he continued, “we do not have access to such reward signals. Furthermore, we are interested in agents that can complete a wide variety of tasks described in open-ended text; it is not feasible for each game to evaluate a ‘reward’ signal for every possible goal. Instead, we train agents using imitation learning from human behavior, given goals in text.”

In other words, having a strict reward structure limits the goals the agent pursues, because if it is guided by the score, it will never try anything that doesn’t maximize that value. But if it values something more abstract, like how close its behavior is to behavior it has observed before, then it can be trained to “want” to do almost anything as long as the training material represents it in some way.
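To make the contrast concrete, here is a minimal behavior-cloning sketch in Python. It is not DeepMind’s implementation: the action labels, the toy demonstrations, and every function name are hypothetical, and a single vector of scores stands in for what would really be a large vision-and-language model. The point it illustrates is that the training signal is “how likely was the action the human took,” with no game score involved.

```python
import math

# Hypothetical action labels, invented for illustration only.
ACTIONS = ["move_forward", "turn_left", "open_door"]

def softmax(logits):
    """Turn raw scores into a probability distribution over actions."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def imitation_loss(logits, demo_action):
    """Negative log-likelihood of the action the human demonstrator took."""
    probs = softmax(logits)
    return -math.log(probs[ACTIONS.index(demo_action)])

def imitation_step(logits, demo_action, lr=0.5):
    """One gradient-descent step on the loss above (gradient = probs - one_hot)."""
    probs = softmax(logits)
    target = ACTIONS.index(demo_action)
    return [
        x - lr * (p - (1.0 if i == target else 0.0))
        for i, (x, p) in enumerate(zip(logits, probs))
    ]

# Toy demonstrations: (text instruction, action the human took). Invented data.
demos = [("open the door", "open_door")] * 20

# Only one instruction appears here, so one vector of action scores suffices.
logits = [0.0, 0.0, 0.0]
loss_before = imitation_loss(logits, "open_door")
for _instruction, action in demos:
    logits = imitation_step(logits, action)
loss_after = imitation_loss(logits, "open_door")

# The agent's preferred action after training on the demonstrations.
predicted = ACTIONS[max(range(len(ACTIONS)), key=lambda i: logits[i])]
```

Nothing in the loop asks whether opening the door was “good” for a score; the agent is pushed toward whatever the demonstrations show, which is why the range of goals is bounded by the training material rather than by a reward function.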

Other companies are also looking into this kind of open-ended collaboration and creation; for example, conversations with NPCs are seen as an opportunity to put LLM-type chatbots to work. Simple improvised actions and interactions are also being simulated and tracked by AI in some very interesting agent research.

Of course there are experiments with infinite games like MarioGPT, but that’s another story entirely.