Guo Liwei, Anush Moorthy, Chen Liheng, Vinicius Carvalho, Aditya Mavlankar, Agata Opalach, Adithya Prakash, Kyle Swanson, Jessica Tweneboah, Subbu Venkatrav, Zhu Lishan

This is the first blog in a multi-part series about how Netflix is rebuilding its video processing pipeline with microservices, so that we can maintain a rapid pace of innovation and continuously improve the systems behind member streaming and studio operations. This introductory blog gives an overview of our journey. Future blogs will dive deeper into each service, sharing insights and lessons learned along the way.

The Netflix video processing pipeline came online with the launch of the streaming service in 2007. Since then, the video pipeline has undergone substantial improvements and extensive expansion:

- Starting from Standard Definition (SD) and Standard Dynamic Range (SDR), we’ve expanded the encoding pipeline to 4K and High Dynamic Range (HDR) to support our premium offering.

- We moved from centralized linear encoding to distributed chunk-based encoding. This architectural shift greatly reduced processing latency and increased system resiliency.

- Instead of relying on a limited number of dedicated instances, we moved to Netflix’s internal trough, the spare compute created by autoscaling microservices, which brought significant improvements in compute elasticity and resource utilization efficiency.

- We introduced encoding innovations, such as per-title and per-shot optimizations, that deliver significant quality-of-experience (QoE) improvements for Netflix members.

- By integrating with studio content systems, we enabled the pipeline to leverage rich metadata from the creative side and create more engaging member experiences, such as interactive storytelling.

- We expanded pipeline support to serve studio and content-development use cases, which have different latency and resiliency requirements than the traditional streaming use case.

Our experience over the past fifteen years has reinforced our belief that an efficient and flexible video processing pipeline, one that lets us innovate and support both our streaming service and our studio partners, is critical to Netflix’s continued success. To that end, the video and image encoding team in Encoding Technologies (ET) has spent the past few years rebuilding the video processing pipeline on Cosmos, our next-generation microservice-based computing platform.

Reloaded

Since 2014, we have developed and operated the video processing pipeline on our third-generation platform, Reloaded. Reloaded is well architected and provides good stability, scalability, and a reasonable level of flexibility. It has served as the foundation for many of the encoding innovations developed by our team.

When Reloaded was designed, it focused on a single use case: converting high-quality media files (also known as mezzanines) received from studios into compressed assets for Netflix streaming. Reloaded was built as a single monolithic system, with developers from various media teams in ET and our platform partner team, Content Infrastructure and Solutions (CIS)¹, working on the same codebase to build one system that handles all media assets. Over the years, the system expanded to support many new use cases. This led to a significant increase in system complexity, and the limitations of Reloaded began to show:

- Coupled functionality: Reloaded consists of multiple worker modules and an orchestration module. Setting up a new Reloaded module and integrating it with the orchestration takes considerable effort, which creates a bias toward extending existing modules rather than creating new ones when developing features. For example, in Reloaded, video quality calculation is implemented inside the video encoder module. With this implementation, it is extremely difficult to recalculate video quality without re-encoding.

- Monolithic structure: Since Reloaded modules often live in the same repository, it is easy to overlook code-isolation rules, and there is a lot of unintended code reuse across what should be strong boundaries. Such reuse creates tight coupling and slows development. The tight coupling among modules further forces us to deploy all modules together.

- Long release cycles: Deploying all modules together meant more fear of unexpected production outages, because debugging and rollback can be difficult for a deployment of this size. This drove a “release train” approach. Every two weeks, a “snapshot” of all modules was taken and promoted to a “release candidate”. The release candidate then went through exhaustive testing that tried to cover as much surface area as possible, and this testing stage took about two weeks. Therefore, depending on when a code change was merged, it could take anywhere from two to four weeks to reach production.
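The two-to-four-week window follows directly from the snapshot and testing cadence. The toy calculation below is a sketch under simplifying assumptions that are ours, not Reloaded’s actual schedule: snapshots are cut exactly on days 0, 14, 28, …, testing always takes exactly 14 days, and a change merged on a snapshot day makes that snapshot. The constant and function names are hypothetical.

```python
# Toy model of the release-train delay (assumed cadence, not a real schedule):
# snapshots on days 0, 14, 28, ... and exactly 14 days of release-candidate testing.
SNAPSHOT_INTERVAL_DAYS = 14
TESTING_DAYS = 14


def days_to_production(merge_day: int) -> int:
    """Days between merging a change and its release.

    A change merged on a snapshot day is assumed to make that snapshot.
    """
    if merge_day < 0:
        raise ValueError("merge_day must be non-negative")
    # Ceiling division gives the first snapshot on or after the merge day.
    next_snapshot = -(-merge_day // SNAPSHOT_INTERVAL_DAYS) * SNAPSHOT_INTERVAL_DAYS
    wait_for_snapshot = next_snapshot - merge_day
    return wait_for_snapshot + TESTING_DAYS


if __name__ == "__main__":
    delays = [days_to_production(d) for d in range(28)]
    print(min(delays), max(delays))  # 14 27
```

Under these assumptions, a change merged right at a snapshot ships in 14 days, while one merged the day after a snapshot waits 27 days, which matches the two-to-four-week range.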

As time went on and features grew, the rate of new feature contributions to Reloaded dropped. Several promising ideas were abandoned because of the outsized effort needed to work around architectural limitations. The platform that had once served us well was becoming a drag on development.

Cosmos

In response, the CIS and ET teams began developing the next-generation platform Cosmos in 2018. In addition to the scalability and stability developers already enjoyed in Reloaded, Cosmos is designed to significantly increase system flexibility and speed of feature development. To achieve this goal, Cosmos was developed as a computing platform for workflow-driven, media-centric microservices.

Microservice architecture provides strong decoupling between services. Per-microservice workflow support relieves the burden of implementing complex media workflow logic. Finally, the associated abstractions allow media algorithm developers to focus on the manipulation of video and audio signals rather than infrastructure issues. A full list of the benefits Cosmos offers can be found in the linked blog.

Service Boundaries

In a microservice architecture, a system is composed of many fine-grained services, each focused on a single function. So the first, and arguably most important, step is to identify boundaries and define the services.

In our pipeline, as media assets go from creation to ingest to delivery, they go through a number of processing steps, such as analysis and transformation. We analyzed these processing steps to identify “boundaries” and grouped them into distinct domains, which in turn became the building blocks of the microservices we designed.

For example, in Reloaded, the video encoding module bundles five steps:

1. Split the input video into small chunks

2. Encode each chunk independently

3. Calculate the quality score (VMAF) of each chunk

4. Assemble all encoded chunks into a single encoded video

5. Aggregate the quality scores of all chunks
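To make the bundling concrete, here is a toy, single-process sketch of the five steps in one function. Everything in it is a stand-in chosen for illustration: frames are plain floats, “encoding” is quantization to a step size, the per-chunk score is a made-up error metric rather than VMAF, and aggregation is assumed to be a frame-count-weighted mean. The function names are hypothetical and do not reflect Reloaded’s code.

```python
from dataclasses import dataclass


@dataclass
class EncodedChunk:
    index: int
    frames: list[float]
    quality: float  # toy per-chunk score standing in for VMAF


def split_into_chunks(frames: list[float], chunk_size: int) -> list[list[float]]:
    # Step 1: split the input into fixed-size chunks; the last may be shorter.
    return [frames[i:i + chunk_size] for i in range(0, len(frames), chunk_size)]


def encode_chunk(chunk: list[float], step: float) -> list[float]:
    # Step 2: toy "lossy encode" that quantizes each sample to a multiple of step.
    return [round(x / step) * step for x in chunk]


def chunk_quality(source: list[float], encoded: list[float]) -> float:
    # Step 3: toy score in [0, 100] (NOT VMAF): 100 minus 100x the mean absolute error.
    mean_err = sum(abs(a - b) for a, b in zip(source, encoded)) / len(source)
    return max(0.0, 100.0 - 100.0 * mean_err)


def encode_video(frames: list[float], chunk_size: int, step: float) -> tuple[list[float], float]:
    if not frames:
        raise ValueError("input video has no frames")
    results = []
    for i, chunk in enumerate(split_into_chunks(frames, chunk_size)):
        # Chunks are independent, so a real system could encode them in parallel.
        encoded = encode_chunk(chunk, step)
        results.append(EncodedChunk(i, encoded, chunk_quality(chunk, encoded)))
    # Step 4: assemble in index order (parallel workers may finish out of order).
    results.sort(key=lambda c: c.index)
    assembled = [f for c in results for f in c.frames]
    # Step 5: aggregate chunk scores, weighting each chunk by its frame count.
    overall = sum(c.quality * len(c.frames) for c in results) / len(frames)
    return assembled, overall
```

Because steps 3 and 5 live inside `encode_video`, the only way to get a new quality number in this sketch is to run the whole encode again, which is the coupling problem described earlier.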

From a system perspective, the assembled encoded video is the primary concern, while the internal chunking and separate chunk encodings exist to meet certain latency and resiliency requirements. Furthermore, as mentioned above, video quality calculation is a completely separate function from encoding.

Therefore, in Cosmos, we created two independent microservices, the Video Encoding Service (VES) and the Video Quality Service (VQS), each providing a clear, decoupled function. Chunked encoding and assembly are implementation details hidden inside VES.
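A minimal sketch of what this split buys, assuming each service is reduced to a single method: with quality measurement behind its own interface, an existing encode can be re-scored (for example, with a newer quality model) without calling the encoder again. The `Protocol` classes, method signatures, model names (`model-a`, `model-b`), and the hard-coded toy implementations are all hypothetical and are not the Cosmos APIs.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Encode:
    mezzanine_id: str
    recipe: str


class VideoEncodingService(Protocol):
    def encode(self, mezzanine_id: str, recipe: str) -> Encode: ...


class VideoQualityService(Protocol):
    def score(self, mezzanine_id: str, encode: Encode, model: str) -> float: ...


def rescore(vqs: VideoQualityService, encode: Encode, models: list[str]) -> dict[str, float]:
    # Depends only on VQS: existing encodes can be re-scored without touching VES.
    return {m: vqs.score(encode.mezzanine_id, encode, m) for m in models}


class ToyVES:
    def __init__(self) -> None:
        self.calls = 0

    def encode(self, mezzanine_id: str, recipe: str) -> Encode:
        self.calls += 1  # chunking and assembly would stay hidden behind this call
        return Encode(mezzanine_id, recipe)


class ToyVQS:
    # Hard-coded placeholder scores; a real service would compare encode and mezzanine.
    SCORES = {"model-a": 90.0, "model-b": 92.5}

    def score(self, mezzanine_id: str, encode: Encode, model: str) -> float:
        return self.SCORES[model]


if __name__ == "__main__":
    ves, vqs = ToyVES(), ToyVQS()
    enc = ves.encode("mezz-001", "h264-1080p")
    print(rescore(vqs, enc, ["model-a", "model-b"]), ves.calls)
```

Running the example scores one encode with two quality models while the encoder is invoked exactly once.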

Video Services

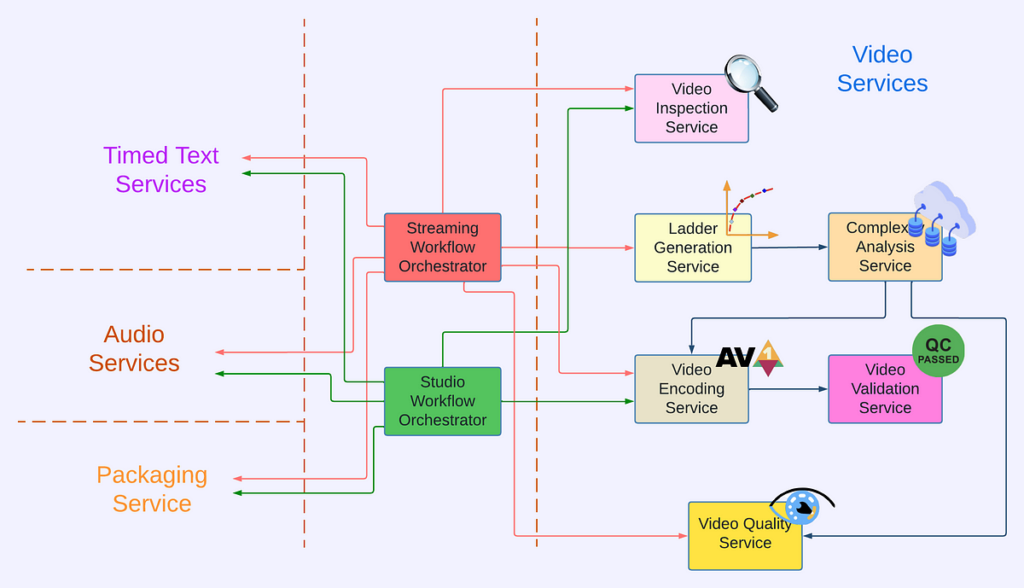

We applied the same approach to the rest of the video processing pipeline to identify functions and service boundaries, which resulted in the following video services².

- Video Inspection Service (VIS): This service takes a mezzanine as input and performs various inspections. It extracts metadata from different layers of the mezzanine for downstream services. In addition, if invalid or unexpected metadata is observed, the inspection service flags the issue and provides actionable feedback to the upstream team.

- Complexity Analysis Service (CAS): The optimal encoding recipe is highly content-dependent. This service takes a mezzanine as input and performs analysis to understand the content’s complexity. It calls VES for pre-encoding and VQS for quality evaluation. The results are stored in a database so they can be reused.

- Ladder Generation Service (LGS): This service creates a complete bitrate ladder for a given encoding family (H.264, AV1, etc.). It fetches complexity data from CAS and runs optimization algorithms to create encoding recipes. CAS and LGS cover most of the innovations we’ve previously presented on our tech blog (per-title, mobile encodes, per-shot, optimized 4K encoding, etc.). By wrapping ladder generation in a separate microservice (LGS), we decouple the ladder optimization algorithms from the creation and management of complexity analysis data (which resides in CAS). We expect this to give us greater freedom to experiment and a faster pace of innovation.

- Video Encoding Service (VES): This service takes a mezzanine and an encoding recipe and creates an encoded video. The recipe specifies the desired encoding format and output properties such as resolution and bitrate. The service also provides options to fine-tune latency, throughput, and similar trade-offs depending on the use case.

- Video Verification Service (VVS): This service takes an encoded video and a list of expectations about the encode. These expectations include the properties specified in the encoding recipe and the conformance requirements of the codec specification. VVS analyzes the encoded video and compares the results against the specified expectations. Any discrepancy is flagged in the response to alert the caller.

- Video Quality Service (VQS): This service takes a mezzanine and an encoded video as input and calculates the quality score (VMAF) of the encoded video.
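As one example of these service contracts, the compare-and-flag behavior of VVS can be sketched as a function that checks measured properties against the recipe’s expectations and returns a list of discrepancies. This is only an illustration under our own assumptions: just three properties are modeled, the 10% bitrate tolerance is an arbitrary hypothetical default, codec-conformance checks are left out, and the names `EncodeProperties` and `verify` are invented here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EncodeProperties:
    codec: str
    width: int
    height: int
    bitrate_kbps: float


def verify(actual: EncodeProperties, expected: EncodeProperties,
           bitrate_tolerance: float = 0.10) -> list[str]:
    """Compare measured properties with expectations; an empty list means pass."""
    issues = []
    if actual.codec != expected.codec:
        issues.append(f"codec: expected {expected.codec}, got {actual.codec}")
    if (actual.width, actual.height) != (expected.width, expected.height):
        issues.append(
            f"resolution: expected {expected.width}x{expected.height}, "
            f"got {actual.width}x{actual.height}"
        )
    # Bitrate is compared within a relative tolerance instead of exactly.
    if abs(actual.bitrate_kbps - expected.bitrate_kbps) > bitrate_tolerance * expected.bitrate_kbps:
        issues.append(
            f"bitrate: expected ~{expected.bitrate_kbps} kbps, got {actual.bitrate_kbps} kbps"
        )
    return issues
```

Returning every discrepancy, instead of stopping at the first one, lets the caller see all problems with an encode in a single response.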

Service Orchestration

Each video service provides a dedicated function, and the services work together to produce the required video assets. Currently, the two main use cases of Netflix’s video pipeline are producing assets for member streaming and for studio operations. For each use case, we built a dedicated workflow orchestrator so that service orchestration can be customized to best meet the corresponding business needs.

For the streaming use case, the generated videos are deployed to our Content Delivery Network (CDN) for Netflix members to watch. These videos can easily be viewed millions of times. The Streaming Workflow Orchestrator uses almost all of the video services to create streams that provide an impeccable member experience. It uses VIS to detect and reject non-conformant or low-quality mezzanines, calls LGS for encoding recipe optimization, uses VES to encode video, and calls VQS for quality measurement, with the quality data further fed into Netflix’s data pipeline for analysis and monitoring. In addition to video services, the Streaming Workflow Orchestrator uses audio and timed text services to generate audio and text assets, and packaging services to “containerize” assets for streaming.
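The call sequence described above can be sketched as a small orchestrator that takes each service as a plain callable. This is a hypothetical simplification, not Netflix’s orchestrator: real calls are remote and asynchronous, VIS is reduced to “return a list of issues”, LGS to “return a list of recipe names”, the quality log is an in-memory list standing in for the data pipeline, and audio, timed text, and packaging are omitted. All class, field, and exception names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


class MezzanineRejected(Exception):
    """Raised when inspection flags the mezzanine as unusable."""


@dataclass
class StreamingWorkflow:
    inspect: Callable[[str], list[str]]           # VIS: returns a list of issues
    generate_ladder: Callable[[str], list[str]]   # LGS: returns encoding recipes
    encode: Callable[[str, str], str]             # VES: returns an encode id
    measure_quality: Callable[[str, str], float]  # VQS: returns a quality score
    quality_log: list[tuple[str, float]] = field(default_factory=list)

    def run(self, mezzanine_id: str) -> list[str]:
        issues = self.inspect(mezzanine_id)
        if issues:
            # Reject substandard mezzanines before spending compute on encoding.
            raise MezzanineRejected("; ".join(issues))
        encodes = []
        for recipe in self.generate_ladder(mezzanine_id):
            enc = self.encode(mezzanine_id, recipe)
            # Record quality data for downstream analysis and monitoring.
            self.quality_log.append((enc, self.measure_quality(mezzanine_id, enc)))
            encodes.append(enc)
        return encodes  # audio, timed text, and packaging steps are omitted
```

Injecting the services as callables keeps the orchestration logic testable with stubs, and reflects the idea that each orchestrator composes the same services differently.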

For the studio use case, example video assets include marketing clips and daily production editorial proxies. Requests from the studio side are often latency-sensitive. For example, someone on the production team may be waiting to review footage so they can decide on the next day’s shooting plan. Therefore, the Studio Workflow Orchestrator is optimized for fast turnaround and focuses on core media processing services. At present, the Studio Workflow Orchestrator calls VIS to extract metadata from the ingested assets and calls VES with predefined recipes. Compared with member streaming, studio operations have different and unique requirements for video processing. Therefore, the Studio Workflow Orchestrator is the exclusive user of certain encoding features, such as forensic watermarking and timecode/text burn-in.
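By contrast, the studio path can be sketched as just two calls: VIS for metadata, then VES with a recipe looked up from a fixed catalog, skipping the CAS/LGS analysis that the streaming path relies on. The recipe catalog, its entries, the flag names `forensic_watermark` and `burn_in_timecode`, and the function signature are all hypothetical placeholders for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class StudioRecipe:
    name: str
    codec: str
    height: int
    forensic_watermark: bool = False  # studio-only feature
    burn_in_timecode: bool = False    # studio-only feature


# Hypothetical catalog of predefined recipes.
PREDEFINED_RECIPES = {
    "editorial-proxy": StudioRecipe("editorial-proxy", "h264", 1080, burn_in_timecode=True),
    "marketing-review": StudioRecipe("marketing-review", "h264", 720, forensic_watermark=True),
}


def run_studio_workflow(
    asset_id: str,
    recipe_name: str,
    inspect: Callable[[str], dict[str, Any]],
    encode: Callable[[str, StudioRecipe, dict[str, Any]], str],
) -> str:
    # VIS: extract metadata from the ingested asset.
    metadata = inspect(asset_id)
    # No CAS/LGS step: a fixed recipe avoids per-title analysis and keeps turnaround short.
    recipe = PREDEFINED_RECIPES[recipe_name]
    # VES: a single encode with the predefined recipe.
    return encode(asset_id, recipe, metadata)
```

Trading per-title optimization for a lookup table is what keeps this path short, which suits a reviewer waiting on tomorrow’s shooting plan.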